ETL Process (Extract, Transform, Load)

Overview

Data mining has become a key part of running an effective business in today's consumer landscape. Businesses are spending more on data collection as the volume and variety of data grow rapidly.

Data preparation, collection, and maintenance are essential for running an efficient business. Over the last decade, ETL has helped business operations run smoothly.

Data mining and data warehousing are the core principles for analyzing large volumes of data. They also support better management decisions and plans that maximize an organization’s sales and profit.

A data warehouse is a collection of historical data from various sources (homogeneous or heterogeneous). It is used to review and query data in order to extract useful information and knowledge.

A data warehouse stores data from a variety of sources. These can include text files, images, spreadsheets, operational data, and relational or non-relational data.

Because this data is heterogeneous, it must be cleaned to handle inconsistent or corrupted (“contaminated”) data. This step is known as “data pre-processing”.

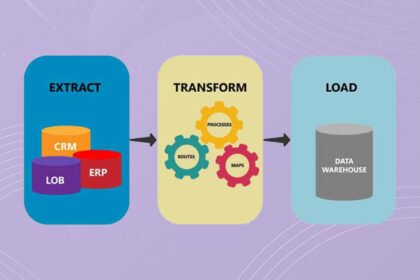

After data pre-processing, consistent data from the various sources is extracted and loaded into the data warehouse. The whole procedure is referred to as the ETL process.

ETL is used mainly for data cleansing, data analysis, and data loading. The loaded data is important and is used by end users for various purposes. The three major steps of an ETL process are described below.

A key aspect of the ETL process is that its stages (extraction, transformation, and loading) run in sequence.
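Here is a rough illustration of this sequential flow as a minimal Python sketch. Each stage is a plain function, and each stage starts only after the previous one has finished. The names `run_etl`, `extract`, `transform`, and `load` are hypothetical and do not come from any particular ETL tool. A real pipeline would read from and write to actual data stores rather than in-memory lists.

```python
from typing import Callable, Iterable, List

Record = dict


def run_etl(
    extract: Callable[[], Iterable[Record]],
    transform: Callable[[Record], Record],
    load: Callable[[List[Record]], None],
) -> int:
    """Run extract, transform, and load strictly in sequence; return rows loaded."""
    extracted = list(extract())                       # 1. Extract: pull all rows first
    transformed = [transform(r) for r in extracted]   # 2. Transform each extracted row
    load(transformed)                                 # 3. Load the finished batch
    return len(transformed)


# Usage with a hypothetical in-memory source and target.
warehouse: List[Record] = []
loaded = run_etl(
    extract=lambda: [{"name": " alice ", "qty": 2}, {"name": "BOB", "qty": 1}],
    transform=lambda r: {**r, "name": r["name"].strip().title()},
    load=warehouse.extend,
)
```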

Extraction:

Data is periodically collected (extracted) from source data files according to company specifications. What is extracted depends on the available sources and on business criteria.

During extraction, validation rules are used to filter the results. The extraction step applies these rules so that only data meeting the company's conditions moves on to the next stage. Extraction is not a one-time operation: updates are periodically pulled from the source.
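Below is a minimal sketch of extraction with validation rules. It assumes each source row is a Python dict. The two rules (a non-null `customer_id` and a non-negative `amount`) are hypothetical stand-ins for real company conditions. Only rows that pass every rule move on to the next stage.

```python
from typing import Callable, Iterable, Iterator, List

Rule = Callable[[dict], bool]

# Hypothetical business rules; real ones come from company specifications.
RULES: List[Rule] = [
    lambda r: r.get("customer_id") is not None,                                # key must be present
    lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,  # no negative amounts
]


def extract_valid(rows: Iterable[dict], rules: List[Rule]) -> Iterator[dict]:
    """Yield only the source rows that pass every validation rule."""
    for row in rows:
        if all(rule(row) for rule in rules):
            yield row


source = [
    {"customer_id": 1, "amount": 10.0},
    {"customer_id": None, "amount": 5.0},   # rejected: missing key
    {"customer_id": 2, "amount": -3},       # rejected: negative amount
]
valid = list(extract_valid(source, RULES))
```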

Changes to source data can be captured using different methods:

- Notifying System

- Incremental Update

- Full Extraction

- Online Extraction
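Two of these methods, full extraction and incremental update, can be contrasted in a short sketch. It assumes, hypothetically, that every source row carries an `updated_at` timestamp. The incremental version keeps a “watermark” (the latest timestamp seen so far). On the next run, it pulls only the rows changed after that watermark.

```python
from datetime import datetime


def full_extract(rows):
    """Full extraction: copy every row from the source on each run."""
    return list(rows)


def incremental_extract(rows, last_watermark):
    """Incremental update: return only rows changed since the previous run,
    plus the new watermark to store for the next run."""
    changed = [r for r in rows if r["updated_at"] > last_watermark]
    new_watermark = max((r["updated_at"] for r in changed), default=last_watermark)
    return changed, new_watermark


source = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 5)},
    {"id": 3, "updated_at": datetime(2024, 1, 9)},
]
everything = full_extract(source)
changed, wm = incremental_extract(source, datetime(2024, 1, 4))
again, wm2 = incremental_extract(source, wm)   # nothing new since the last run
```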

Extracted data is often not stored directly in the warehouse. Depending on the requirements, it is first placed in a “Warehouse Staging Area”, which is a temporary storage area.

From there, the data moves from the source files into the data warehouse, and from the warehouse into data marts.
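The flow from source to staging area, then to warehouse and data mart, can be sketched with Python's built-in `sqlite3` module and an in-memory database. The table names (`stg_sales`, `dw_sales`, `mart_sales_by_region`) are hypothetical. The sketch shows three things:

- Raw rows land in staging untouched.
- They are typed and cleaned on their way into the warehouse.
- They are then aggregated into a subject-oriented data mart.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()
# Hypothetical tables: staging keeps raw text, the warehouse enforces types.
cur.execute("CREATE TABLE stg_sales (region TEXT, amount TEXT)")
cur.execute("CREATE TABLE dw_sales (region TEXT NOT NULL, amount REAL NOT NULL)")
cur.execute("CREATE TABLE mart_sales_by_region (region TEXT PRIMARY KEY, total REAL)")

# 1. Source -> staging: land extracted rows exactly as received.
raw = [("north", "10.5"), ("south", "4"), ("north", "2.5")]
cur.executemany("INSERT INTO stg_sales VALUES (?, ?)", raw)

# 2. Staging -> warehouse: standardize codes and cast types while moving.
cur.execute("""
    INSERT INTO dw_sales (region, amount)
    SELECT UPPER(region), CAST(amount AS REAL) FROM stg_sales
""")

# 3. Warehouse -> data mart: build a subject-oriented aggregate.
cur.execute("""
    INSERT INTO mart_sales_by_region (region, total)
    SELECT region, SUM(amount) FROM dw_sales GROUP BY region
""")
conn.commit()

mart = dict(cur.execute("SELECT region, total FROM mart_sales_by_region").fetchall())
```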

Transformation:

Extracted data must be converted into formats that conform to the standardized, predefined structure of the data warehouse.

In this phase, a set of rules is applied to turn the data into usable, organized data for business purposes.

It includes the following steps:

- Cleaning: Map values to standard codes (for example, set null values to 0, Male to ‘M’, and Female to ‘F’).

- Deriving: Create new values by combining existing ones (for example, Total from price and quantity).

- Sorting: Sort data on a given value for fast retrieval.

- Joining: Combine data from a variety of sources (lookup & merge), for example to fill in default values.

- Validation: During a DW refresh, values may change, which can leave the DW inconsistent. By applying validation rules, we can keep the old values in a separate area and store the new value in their place. This addresses the “Slowly Changing Dimension” problem. There are several further techniques for dealing with this problem.
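The cleaning, deriving, joining, and sorting steps can be illustrated on a couple of in-memory rows. This sketch uses assumed data. The field names, the gender code map, and the `"UNKNOWN"` default for failed lookups are hypothetical choices, not rules from any specific warehouse.

```python
# Hypothetical order rows and a product lookup table.
orders = [
    {"order_id": 2, "gender": "Female", "price": 5.0, "qty": 3, "product_id": "P2"},
    {"order_id": 1, "gender": "Male", "price": None, "qty": 2, "product_id": "P1"},
]
products = {"P1": "Widget"}  # lookup source; P2 is deliberately missing

GENDER_CODES = {"Male": "M", "Female": "F"}


def transform(rows, lookup):
    out = []
    for r in rows:
        r = dict(r)  # copy so the source rows are not mutated
        # Cleaning: map values to codes and replace nulls with 0.
        r["gender"] = GENDER_CODES.get(r["gender"], r["gender"])
        r["price"] = r["price"] if r["price"] is not None else 0
        # Deriving: Total from price and quantity.
        r["total"] = r["price"] * r["qty"]
        # Joining: lookup & merge, with a default value when no match is found.
        r["product_name"] = lookup.get(r["product_id"], "UNKNOWN")
        out.append(r)
    # Sorting: order by a given key for faster retrieval downstream.
    return sorted(out, key=lambda r: r["order_id"])


result = transform(orders, products)
```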

Data is processed in the staging area until each stage is completed.

If a problem occurs, processing can resume from the staging area.
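The approach described under Validation keeps the old values in another area while the current row takes the new value. It can be sketched as follows. The dict-based `current` table, the `history` list, and the `apply_change` helper are hypothetical. In a real warehouse, both `current` and `history` would be tables. This is only one of several ways to handle a slowly changing dimension.

```python
from datetime import date


def apply_change(current, history, key, new_attrs, changed_on):
    """Store the new value in the current table, first copying the old version
    into a separate history area. Returns False if nothing changed."""
    old = current.get(key)
    if old == new_attrs:
        return False                                   # no change, no history row
    if old is not None:
        history.append({"key": key, **old, "replaced_on": changed_on})
    current[key] = dict(new_attrs)
    return True


current, history = {}, []
apply_change(current, history, "C1", {"city": "Pune"}, date(2024, 1, 1))
apply_change(current, history, "C1", {"city": "Mumbai"}, date(2024, 6, 1))
unchanged = apply_change(current, history, "C1", {"city": "Mumbai"}, date(2024, 7, 1))
```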

Load:

The transformed (structured) data is loaded into the corresponding tables in the data warehouse.

Data consistency must be maintained, and records should be updated as needed at load time. Referential integrity must also be upheld to ensure data continuity.
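Referential integrity during loading can be enforced by the database itself. The sketch below uses `sqlite3` with foreign keys enabled; SQLite checks them only after `PRAGMA foreign_keys = ON`. It loads each batch inside one transaction. A row that points at a missing customer therefore causes the whole batch to be rolled back. The table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled
conn.execute("CREATE TABLE dim_customer (customer_id INTEGER PRIMARY KEY, name TEXT)")
conn.execute("""
    CREATE TABLE fact_sales (
        sale_id INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES dim_customer(customer_id),
        amount REAL
    )
""")


def load_sales(conn, rows):
    """Load fact rows in one transaction; roll back the batch on an integrity error."""
    try:
        with conn:  # commits on success, rolls back on exception
            conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?)", rows)
        return True
    except sqlite3.IntegrityError:
        return False


conn.execute("INSERT INTO dim_customer VALUES (1, 'Alice')")
conn.commit()
ok = load_sales(conn, [(1, 1, 9.99)])
bad = load_sales(conn, [(2, 1, 5.0), (3, 42, 1.0)])  # customer 42 does not exist
count = conn.execute("SELECT COUNT(*) FROM fact_sales").fetchone()[0]
```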

In the loading stage, data can also be loaded into data marts (subject-oriented data).

Two methods of loading can be used:

- Record by record

- Bulk Load

Bulk loading is the preferred approach for loading data into the warehouse. It has less overhead than record-by-record loading, which improves performance.
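The two loading methods can be compared in a small `sqlite3` sketch:

- Record-by-record issues one insert and one commit per row.
- The bulk version sends all rows through `executemany` inside a single transaction.

The `items` table is hypothetical. Production warehouses usually also offer native bulk loaders (PostgreSQL's `COPY`, for example), which go further than batching inserts.

```python
import sqlite3

ROWS = [(i, f"item-{i}") for i in range(10_000)]


def new_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, name TEXT)")
    return conn


def load_record_by_record(conn, rows):
    """One INSERT and one COMMIT per row: simple, but pays overhead on every row."""
    for row in rows:
        conn.execute("INSERT INTO items VALUES (?, ?)", row)
        conn.commit()


def load_bulk(conn, rows):
    """All rows batched through executemany inside a single transaction."""
    with conn:  # one commit at the end, or a rollback on error
        conn.executemany("INSERT INTO items VALUES (?, ?)", rows)


db_rows, db_bulk = new_db(), new_db()
load_record_by_record(db_rows, ROWS)
load_bulk(db_bulk, ROWS)
```

Against an in-memory database, both versions finish quickly. The per-row commit cost becomes much more noticeable with on-disk or networked databases.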

Tables can be partitioned during loading to improve query performance. A time-interval scheme can be used to divide tables (by year, quarter, month, etc.).
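Time-interval partitioning amounts to routing each row to a partition named after the period its date falls in. The sketch below computes year, quarter, or month partition keys for rows held in memory. The key format (for example `2024_01`) and the `sold_on` field are assumptions. Databases with native partitioning, such as PostgreSQL's `PARTITION BY RANGE`, do this routing themselves once the partitions are declared.

```python
from collections import defaultdict
from datetime import date


def partition_key(d: date, interval: str = "month") -> str:
    """Return the name of the time-interval partition that a date falls into."""
    if interval == "year":
        return f"{d.year}"
    if interval == "quarter":
        return f"{d.year}_Q{(d.month - 1) // 3 + 1}"
    if interval == "month":
        return f"{d.year}_{d.month:02d}"
    raise ValueError(f"unsupported interval: {interval}")


def partition_rows(rows, date_field, interval="month"):
    """Group rows by partition key, preserving their original order."""
    parts = defaultdict(list)
    for r in rows:
        parts[partition_key(r[date_field], interval)].append(r)
    return dict(parts)


rows = [
    {"id": 1, "sold_on": date(2024, 1, 15)},
    {"id": 2, "sold_on": date(2024, 2, 3)},
    {"id": 3, "sold_on": date(2024, 1, 30)},
]
by_month = partition_rows(rows, "sold_on")
by_quarter = partition_rows(rows, "sold_on", "quarter")
```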

There is a variety of ETL tools on the market.

Open Source Software:

- Pentaho Data Integration (Kettle)

- ETL4ALL – IKAN

- GeoKettle

- ETL by Jaspersoft

- KETL

- Talend Open Studio

- CloverETL

Commercial Tools:

- WebSphere DataStage by IBM (formerly known as Ascential DataStage)

- DataFlow by Group 1 Software (Sagent)

- Cognos Data Manager by IBM (formerly known as Cognos DecisionStream)

- Data Integration Studio (SAS)

- Transformation Manager by ETL Solutions Ltd.

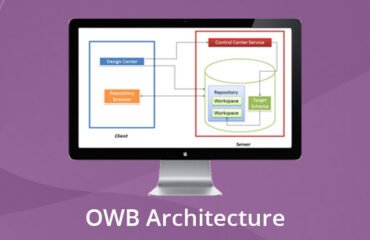

- Oracle – Warehouse Builder

- Data Integrated Suite ETL by Sybase

- BusinessObjects Data Integrator (SAP)

- SQL Server Integration Services (Microsoft)

- Expressor Semantic Data Integration System by Expressor Software

- Elixir Repertoire – Elixir

- Oracle Data Integrator by Oracle (formerly known as Sunopsis Data Conductor)

- Data Integrator – Pervasive

- Data Integrator for Business Objects

- Data Migrator – Information Builders

- DT/Studio of Embarcadero Technologies

- Ab Initio

- DB2 Warehouse Edition by IBM

- PowerCenter by Informatica